The other week I gave an overview of how AI is currently being used by police in criminal investigations. In that post, I briefly mentioned that algorithms can, to some extent, predict where violent crime might strike next. With that information, police presence can be increased in at-risk areas to help prevent tragedy. While AI has only recently been applied to this field and shown potential in it, crime mapping and crime forecasting have been around for much longer.

The first instance of crime mapping is often credited to Adriano Balbi and André Michel Guerry, two researchers working in France who, in 1829, drew a correlation between the education levels of different regions and violent crime. Later, in the early 20th century, other researchers went further and began to analyze the relationship between juvenile delinquency and the social conditions of the communities where it occurred. This correlation was first noticed as cities grew and urban poverty rose, and modern research continues to show the same pattern. Take, for example, this compilation of interactive maps showing the link between crime rates and socioeconomic status in Toronto.

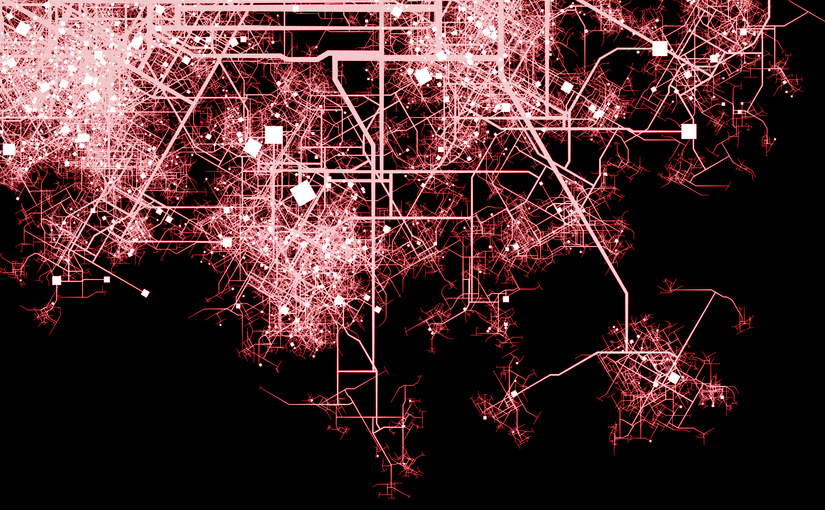

This relationship is well known and well studied, but the first wide-scale, data-driven approach to criminal justice arguably didn't arrive until the 1990s. It started with the NYPD, where Police Commissioner Bill Bratton implemented a system called CompStat. CompStat is short for "Computer Statistics," and it was created to use data to assist in policing and to help hold police accountable by tracking their reports. The International Association of Chiefs of Police summarizes the CompStat process in one statement: "Collect, analyze, and map crime data and other essential police performance measures on a regular basis, and hold police managers accountable for their performance as measured by these data." CompStat relies on spatial and temporal patterns to help police predict crime and, ideally, take preventive measures. While the data from CompStat is essential to this form of policing, the statistics still have to be interpreted and turned into plans of action, often in regular crime strategy meetings.

Since its introduction in the '90s, CompStat has spread widely and is still used by police departments across the US. While some data suggested that CompStat helped reduce crime significantly, other research has since found little evidence to support that claim. Economist Steven Levitt argues that the sharp decline in crime rates during the '90s was driven by many other factors unrelated to CompStat. There are even reports that police may be underreporting serious crime because of CompStat's pressure to keep reducing crime year after year. In recent years, some have begun to question how useful data-driven approaches to criminal justice really are.

Even so, crime forecasting keeps growing more sophisticated as machine learning has been introduced to the process. As mentioned in my previous post, this approach is also riddled with potential pitfalls, from racial bias to an increase in non-violent arrests in an area as a result of the algorithm's predictions. The 2022 model developed at the University of Chicago seems to show promising accuracy, but AI should always be implemented with care. Because the field is still so new, it remains unclear how much AI can actually improve crime forecasting.

The connection between a location's socioeconomic status and its crime rates is clear, but it's important to remember that crime is a complex issue. It is not as simple as identifying criminal "hot spots" and increasing police presence there. After all, policing is often reactive and does little to address the root of the issue: the socioeconomic conditions that led to those crime rates in the first place. That is not a problem data, statistics, or even machine learning can solve on their own.